SMWRDFConnector Architecture - mental picture

Sat, 2010-06-19 16:49 | by Samuel Lampa

This is my current mental picture of the architecture of the parts included in the RDF import/export functionality I'm implementing for Semantic MediaWiki as part of my Google Summer of Code project. I just got the ARC2-based store working. Functionality still to be implemented is drawn with dashed lines:

```
- - - - - - - - - - -      - - - - - - - - -
|      Export       |      |     Import    |
- - - - - - - - - - -      - - - - - - - - -
          ^                        |
          |                        v
- - - - - - - - - - -      -----------------
| Equiv URI handler |  ->  |   SMW Writer  |
- - - - - - - - - - -      -----------------
          ^                        |
          |                        v
---------------------      -----------------
| SPARQL+ Interface |      |      SMW      |
---------------------      -----------------
          ^        ________/       |
          |       v                v
---------------------      -----------------
|    ARC2 Store     |      |  MediaWiki DB |
---------------------      -----------------
```

Working ARC2 RDF Store connector committed

Sat, 2010-06-19 02:28 | by Samuel Lampa

I now have a working RDF store connector for Semantic MediaWiki that uses ARC2's RDF store rather than SMW's built-in store. This makes it possible to take advantage of functionality in ARC2, such as setting up a SPARQL endpoint.

Thanks to Alfredas Chmieliauskas for the Joseki store connector in the SparqlExtension for SMW, on which this connector is heavily based.

The ARC2 connector implements the same subset of the SMWStore API as the JosekiStore does, but I'm not yet sure whether more needs to be implemented for what we want to do (general RDF import/export). Gotta figure that out.
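Like the JosekiStore it is based on, the connector keeps SMW's own data and mirrors it into a separate RDF store. A minimal Python sketch of that mirroring pattern, assuming a wrapper that forwards each write to the primary store and replays it into a triple store: the method names `update_data` and `delete_subject` only loosely echo SMWStore's `updateData()` and `deleteSubject()`, and all classes here are hypothetical in-memory stand-ins, not the actual PHP code.

```python
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (page, property, value)
Fact = Tuple[str, str]         # (property, value)


class InMemoryTripleStore:
    """Hypothetical stand-in for the ARC2 store: just a set of triples."""

    def __init__(self) -> None:
        self.triples: Set[Triple] = set()

    def insert(self, triples: List[Triple]) -> None:
        self.triples.update(triples)

    def delete_subject(self, subject: str) -> None:
        self.triples = {t for t in self.triples if t[0] != subject}


class PrimaryStore:
    """Hypothetical stand-in for SMW's own store: page -> list of facts."""

    def __init__(self) -> None:
        self.pages: Dict[str, List[Fact]] = {}


class MirroringStore:
    """Forward writes to the primary store and mirror them as triples."""

    def __init__(self, primary: PrimaryStore, mirror: InMemoryTripleStore) -> None:
        self.primary = primary
        self.mirror = mirror

    def update_data(self, page: str, facts: List[Fact]) -> None:
        self.primary.pages[page] = list(facts)
        # Replace rather than append: drop the page's old triples first,
        # so edits that remove a fact also remove it from the mirror.
        self.mirror.delete_subject(page)
        self.mirror.insert([(page, prop, value) for prop, value in facts])

    def delete_subject(self, page: str) -> None:
        self.primary.pages.pop(page, None)
        self.mirror.delete_subject(page)
```

Treating every page save as "delete the subject, then insert its current facts" keeps the two stores consistent without having to compute a diff, at the cost of rewriting all triples of a page on each edit.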

The code is available in trunk of the Google Code repository, and install instructions are on the Google Code wiki.

Feel free to try it out, but be warned that it has only been very briefly tested so far!

Back on track GSoC:ing

Sat, 2010-06-19 00:22 | by Samuel Lampa

Back on track GSoC:ing. Follow my progress on my Twitter.

Thesis presentation done

Fri, 2010-06-18 22:55 | by Samuel Lampa

I presented my MSc thesis project "SWI-Prolog as a Semantic Web tool for semantic querying in Bioclipse" today. (The report is available for download here.)

Find the slides below. I expected a largely non-informatics audience (though some of my fellow Bioclipse people showed up =) ), had only 20 minutes, and had lots of unfamiliar concepts to introduce, so the slides are mostly a set of pictures for talking through the basics of the Semantic Web, Prolog and Bioclipse.

Turning back to the GSoC project now!

GSoC project started

Fri, 2010-06-11 02:15 | by Samuel Lampa

I started actual coding for GSoC this Wednesday (the start was delayed two weeks because of exams, which I'll make up for). I'm still getting up to speed, but am now looking into the PHP RDF framework ARC, whose RDF store will replace the RAP store currently used in Semantic MediaWiki. Using ARC itself looks very straightforward; I just have to figure out the SMW Store API. I'm looking at SMWRapStore2.php now to get an idea.

If you want to follow my progress in (approximate) real time, see my Twitter.

Report approved - preliminary version for download

Thu, 2010-06-03 22:07 | by Samuel Lampa

My degree project, titled "SWI-Prolog as a Semantic Web tool for semantic querying in Bioclipse", is getting close to completion. My report has now been approved by the Scientific Reviewer (thanks, Prof. Mats Gustafsson), so I wanted to make it available here (Download PDF). Reports of typos are welcome, of course! :)

First look at code - Thoughts and questions

Thu, 2010-05-13 17:45 | by Samuel Lampa

Coding for my GSoC project will start for real around June 9th, but I just had a first look at the code to start wrapping my head around the things involved. I installed the following on my local SMW:

I tested the RAP-based SPARQL endpoint, played a bit with SMWWriter, and tried to get a grip on how best to use existing functionality for implementing RDF import/export.

Some questions that arose (mainly for Denny, I guess, but feel free to comment):

- For implementing a SPARQL endpoint, should an ARC RDF store be implemented, similar to how RAP implements a separate store that mirrors the content of SMW, or is there some way to avoid the need for an extra store?

- As a starting point for implementing an ARC store, an alternative to the RAP store connector already available in SMW seems to be the JosekiStore connector in SparqlExtension. Of course, one can look at both.

- Where is the functionality related to the equivalent URI property found? In SMW_Exporter.php? Other places too?

- SMWWriter depends on an outdated version of PageObjectModel, while the latter seems to have stabilized (no changes since 2008). What to do about that?

Playing with SMWWriter

Thu, 2010-05-13 16:53 | by Samuel Lampa

We are probably going to use SMWWriter for extending the RDF import/export functionality of Semantic MediaWiki, so I wanted to test it out a bit.

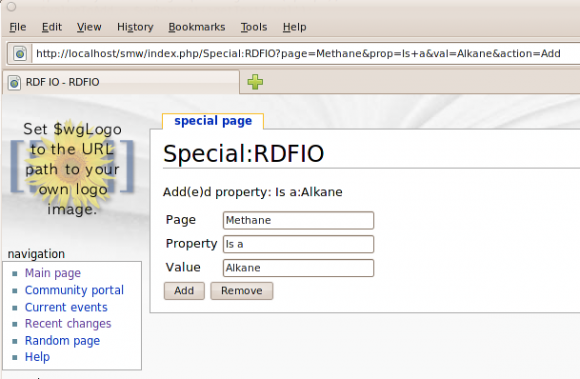

With some copying and pasting of code from this page, I quickly had a MediaWiki Special Page set up, where I could use SMWWriter's internal API to implement a crude form for adding or removing "triples" in my Semantic MediaWiki. See screenshot:

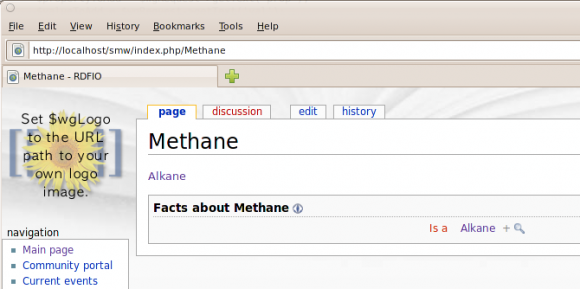

And the result, on the Methane page:

Looks promising. Connecting this with ARC's functionality for parsing SPARQL and RDF/XML should be a big step in the right direction.
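To illustrate what the add/remove form does to a page, and how triples parsed from RDF/XML could later be fed into it, here is a minimal Python sketch that groups triples by subject and applies remove-then-add per page, rendering the result as SMW's `[[Property::Value]]` inline annotations. The triple format, the function names and the choice to render plain annotation text are assumptions for illustration only; SMWWriter's actual API works on SMW's own data objects and is not reproduced here.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # hypothetical (page, property, value)
Fact = Tuple[str, str]         # (property, value)


def group_by_subject(triples: Iterable[Triple]) -> Dict[str, Set[Fact]]:
    """Collect (property, value) pairs per page, giving one update per page."""
    grouped: Dict[str, Set[Fact]] = defaultdict(set)
    for page, prop, value in triples:
        grouped[page].add((prop, value))
    return dict(grouped)


def apply_update(current: Set[Fact], remove: Set[Fact], add: Set[Fact]) -> Set[Fact]:
    """Remove first, then add, so a fact present in both sets ends up kept."""
    return (current - remove) | add


def render_annotations(facts: Set[Fact]) -> List[str]:
    """Render facts as SMW inline annotations, sorted for stable output."""
    return [f"[[{prop}::{value}]]" for prop, value in sorted(facts)]
```

For example, parsing a document that states a formula and a state for Methane would yield one update for the Methane page; removing `("Has state", "gas")` and adding a molar mass would then render as `[[Has formula::CH4]]` and `[[Has molar mass::16.04 g/mol]]`.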

Testing set up of SPARQL endpoint for SMW using RAP and NetAPI

Thu, 2010-05-13 04:25 | by Samuel Lampa

(For my internal documentation, mostly)