Blogs

Screencast: Installing Semantic MediaWiki and RDFIO from scratch on Ubuntu

Sat, 2010-07-17 01:48 | by Samuel Lampa

In a previous blog post I demonstrated the RDFIO extension for Semantic MediaWiki in a screencast, but didn't cover installation.

While testing, I realized that the install procedure was VERY painful. With much valuable help from Oleg Simakoff, I have now corrected a number of errors in the instructions and the code, and added command-line snippets for Linux/Ubuntu to the install instructions. I also created a screencast that goes through the steps from scratch (except the Apache/MySQL/PHP setup) in a little more than 5 minutes. Hope this makes things easier for you testers! (And if you try it out, please report any bugs or issues in the issue tracker!)

Sorry for the low volume! I didn't notice it while recording ... :/

Screencast: RDF Import and SPARQL "Update" in Semantic MediaWiki

Fri, 2010-07-16 19:41 | by Samuel Lampa

So, for those of you who find the install instructions for the RDFIO Semantic MediaWiki extension I'm working on a bit daunting, but would still like a glimpse of what my GSoC project is up to, I created a short (3:20) screencast demonstrating the ARC2-based RDF import and SPARQL "Update" functionality on some example data. (Sorry for the lame narration ... :P ... I hadn't slept for a looong time.)

The screencast shows how you can import RDF/XML into Semantic MediaWiki and then use the SPARQL endpoint to insert or remove data to/from articles, even using the original format of the RDF that you imported earlier.

(If you decide to try installing it, please have a look at the error fixing going on in this thread.)

Moved to new SVN repository (please update links)

Thu, 2010-07-15 23:45 | by Samuel Lampa

I just moved to a new Google Code repository, reflecting the name change of the MediaWiki extension from "SMW RDF Connector" (awfully long, isn't it?) to "SMW RDFIO", or just "RDFIO", so please update your links!

- Main repo url: http://code.google.com/p/smwrdfio

- Keep track of my check-ins: http://code.google.com/p/smwrdfio/source/list

See also the newly created extension page, which will be the hub for information about the extension in the future.

GSoC status update July 14

Wed, 2010-07-14 12:03 | by Samuel Lampa

(I figured I'd better post regular status updates, since a lot of small things tend to get missed if I only blog when there is something to show off.)

As you might have seen among the GSoC2010-tagged posts, I've had a rudimentary RDF/XML import and a SPARQL endpoint (for querying only, so far!) up and running for a while. You should be able to set these up yourself by following the relevant instructions in the Google Code repo:

- ARC2 Store Install (required for SPARQL endpoint)

- ARC2 SPARQL Endpoint Install

- RDF Import Install

Since then I have worked a bit on some use cases, which revealed a lot of intricacies to take into account on RDF import. One of them came out of a spin-off discussion on a blog post by Egon Willighagen, which nicely outlines one of the motivations for having general RDF import in MediaWiki (read post, read discussion).

The last few days I've been heavily refactoring the import code, so that it is more general and easier to adapt in new ways. There is still a lot to improve in the code, like error handling, documentation and more options, so feel free to give feedback on it! (Especially on RDFImporter.php and EquivalendURIHandler.php, and preferably on the mailing lists: semediawiki-devel, semediawiki-user or mediawiki-l.)

The RDF import seems to be the most challenging part of my project (and the export feature depends heavily on it), since it is where I'm breaking some new ground, so feedback here is very welcome.

Choosing wiki titles for RDF entities on import - Feedback wanted

The most challenging issue is how to select reasonable wiki titles for RDF entities on import, based on the RDF data (one relevant blog post here). The requirement to export a page with its original URI should not directly limit the choice, since that is already solved by storing the original URI as a property on each page.

In short, our thoughts so far are:

- First, check whether the RDF entity has one of a prioritized list of properties whose value should be used as the wiki title.

- The first of these could be a special property, like "hasWikiTitle", which can be included in the imported RDF to specify the title manually.

- The list of properties suggested so far can be seen in this blog post. This list should of course be configurable; one open question is how best to implement that configuration: a setting in LocalSettings.php? A wiki page?

- If the entity has none of the properties in this list, its label (the last part of its URI) should be used. For example, if the entity's URI is http://bio2rdf.org/go:0032283, then 0032283 is used.
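To make the proposed strategy concrete, here is a minimal Python sketch (the extension itself is PHP, so this is only an illustration, not RDFIO code). It assumes each entity's properties are available as a dictionary from predicate URI to a single literal value, and it uses a hypothetical URI for the "hasWikiTitle" property, since no actual URI has been decided.

```python
from typing import Dict, List

# Hypothetical URI for the proposed "hasWikiTitle" property (not decided yet).
HAS_WIKI_TITLE = "http://example.org/rdfio#hasWikiTitle"

# Prioritized title properties: the manual override first, then candidates
# from the suggested list. skos:prefLabel is SKOS's preferred-label property.
TITLE_PROPERTIES: List[str] = [
    HAS_WIKI_TITLE,
    "http://semantic-mediawiki.org/swivt/1.0#page",
    "http://purl.org/dc/elements/1.1/title",
    "http://www.w3.org/2004/02/skos/core#prefLabel",
    "http://xmlns.com/foaf/0.1/name",
]


def local_name(uri: str) -> str:
    """Return the part of a URI after its last '#', '/' or ':'."""
    cut = max(uri.rfind("#"), uri.rfind("/"), uri.rfind(":"))
    return uri[cut + 1:]


def choose_wiki_title(uri: str, properties: Dict[str, str],
                      priority: List[str] = TITLE_PROPERTIES) -> str:
    """Choose a wiki title for one RDF entity.

    `properties` maps predicate URIs to a single literal value each.
    """
    # Use the first property, in priority order, that has a non-empty value.
    for prop in priority:
        value = properties.get(prop)
        if value:
            return value
    # Fallback: the label at the end of the entity's URI.
    return local_name(uri)


print(choose_wiki_title("http://bio2rdf.org/go:0032283", {}))  # 0032283
```

Keeping the priority list as a plain parameter leaves the configuration question open: it could be filled from LocalSettings.php, a wiki page, or anything else.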

How to configure namespace prefixes / pseudo-namespaces?

Using only the label, of course, risks converting multiple RDF entities to the same wiki title, which is not acceptable when, for example, using the wiki as a "one-time RDF editor", one of the motivations for this project.
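One way to see the collision problem is to group entity URIs by the title the label-only rule would give them. The Python sketch below is illustrative only (the second and third URIs are made up for the example); it reports every title that more than one URI maps to.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def local_name(uri: str) -> str:
    """Return the part of a URI after its last '#', '/' or ':'."""
    cut = max(uri.rfind("#"), uri.rfind("/"), uri.rfind(":"))
    return uri[cut + 1:]


def find_title_collisions(uris: Iterable[str]) -> Dict[str, List[str]]:
    """Group URIs by label-only title, keeping titles shared by 2+ URIs."""
    by_title: Dict[str, List[str]] = defaultdict(list)
    for uri in uris:
        by_title[local_name(uri)].append(uri)
    return {title: group for title, group in by_title.items() if len(group) > 1}


uris = [
    "http://bio2rdf.org/go:0032283",
    "http://example.org/other/0032283",  # made-up URI with the same local part
    "http://bio2rdf.org/go:0000001",     # made-up example ID, no collision
]
print(find_title_collisions(uris))
# {'0032283': ['http://bio2rdf.org/go:0032283', 'http://example.org/other/0032283']}
```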

To solve this, one alternative (as a configurable option) could be to include a pseudo-namespace in the wiki title (e.g. "go" in the above example, giving "go:0032283" as the wiki title). This could be configured by creating a mapping between base URIs and pseudo-namespaces (i.e. "http://bio2rdf.org/go:" and "go" in this case).
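A minimal Python sketch of applying such a mapping, assuming it is available as a dictionary from base URI to pseudo-namespace (where that dictionary comes from is exactly the open question below). The longest matching base URI wins, so a more specific mapping can override a broader one.

```python
from typing import Dict


def to_wiki_title(uri: str, pseudo_namespaces: Dict[str, str]) -> str:
    """Rewrite a URI as "<pseudo-namespace>:<rest>" using a base-URI mapping.

    The longest matching base URI wins. If no base URI matches, the URI is
    returned unchanged (a real importer would need some other fallback).
    """
    matches = [base for base in pseudo_namespaces if uri.startswith(base)]
    if not matches:
        return uri
    base = max(matches, key=len)
    return pseudo_namespaces[base] + ":" + uri[len(base):]


mapping = {"http://bio2rdf.org/go:": "go"}
print(to_wiki_title("http://bio2rdf.org/go:0032283", mapping))  # go:0032283
```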

But then there is the question of how to configure this mapping. We've been thinking of a few options:

- Let it be configured in the incoming RDF, by using a custom predicate "hasPseudoNameSpace"

- Store a config in a Wiki article

- Config in LocalSettings.php

- On submitting data for import, first analyze it and present a screen listing all the base URIs used, with fields for manually filling in the pseudo-prefixes to use.

- Any combination of the above
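For the last-but-one option, the import step would first need to list the base URIs occurring in the submitted data. A rough Python sketch, assuming the parsed data is available as (subject, predicate, object) string tuples and using a crude "starts with http" test to tell URIs from literals (a real importer should use the parser's term-type information instead):

```python
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]


def base_uri(uri: str) -> str:
    """Return a URI up to and including its last '#', '/' or ':'."""
    cut = max(uri.rfind("#"), uri.rfind("/"), uri.rfind(":"))
    return uri[:cut + 1]


def collect_base_uris(triples: Iterable[Triple]) -> List[str]:
    """Return the sorted, distinct base URIs used in the triples."""
    bases = set()
    for triple in triples:
        for term in triple:
            # Crude URI test: a literal starting with http:// would be
            # miscounted here.
            if term.startswith(("http://", "https://")):
                bases.add(base_uri(term))
    return sorted(bases)


triples = [
    ("http://bio2rdf.org/go:0032283", "http://purl.org/dc/elements/1.1/title", "Some title"),
    ("http://bio2rdf.org/go:0032283", "http://xmlns.com/foaf/0.1/name", "Some name"),
]
for base in collect_base_uris(triples):
    print(base)  # one row per base URI in the prefix form
```

Each printed base URI would become one row in the form, next to an empty field for its pseudo-prefix.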

I will keep working on this and try to figure out the most reasonable strategy together with Denny (my GSoC mentor), but feedback and comments are always welcome! (As said, preferably send feedback to the mailing lists: semediawiki-devel, semediawiki-user or mediawiki-l!)

If you want to follow the project's progress, see the status page for options.

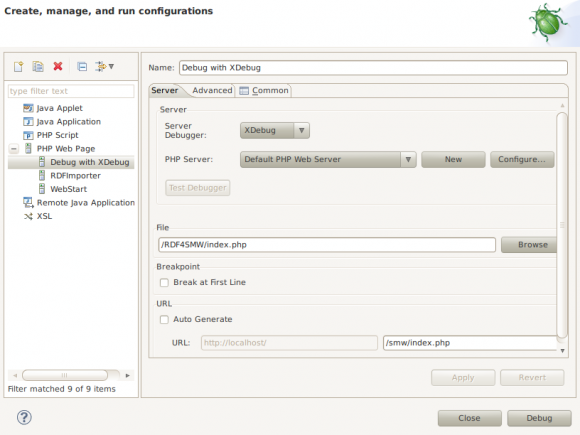

Debugging MediaWiki extensions with Eclipse for PHP and XDebug on Ubuntu 10.04

Tue, 2010-07-13 17:51 | by Samuel Lampa

I ran into some trouble debugging with XDebug in Eclipse for PHP Developers / PDT (breakpoints stopped being hit after I changed the location of my www folder, which I only figured out later), so I wanted to document the full setup procedure here. I mainly followed this blog post. (This assumes you have Apache and PHP set up!)

- Download Eclipse for PHP developers

- I'm using the Linux 32-bit version

- Because of a bug with PDT for Eclipse Helios, I chose to go with Eclipse PDT SR2 for now.

- Install XDebug by typing:

```shell
sudo apt-get install php5-xdebug
```

- Edit /etc/php5/conf.d/xdebug.ini to look something like this:

```ini
zend_extension=/usr/lib/php5/20090626+lfs/xdebug.so
xdebug.remote_enable=On
xdebug.remote_host="localhost"
xdebug.remote_port=9000
xdebug.remote_handler="dbgp"
; xdebug.remote_log="/tmp/xdebug.log"
```

- Enabling the log line (by uncommenting it) can be useful if you run into trouble.

- The first line may differ depending on your PHP version. Use the line found in this file (the default for your version of Ubuntu):

/etc/php5/conf.d/xdebug.ini.ucf-dist

- Restart apache:

```shell
sudo apache2ctl restart
```

Configure Eclipse

- Create a PHP Project. Mine is named "RDF4SMW".

- Choose Run > Debug Configurations in Eclipse

- Adjust the configuration to match your web server setup. For me, it looks like this ("smw" is the folder where MediaWiki is installed):

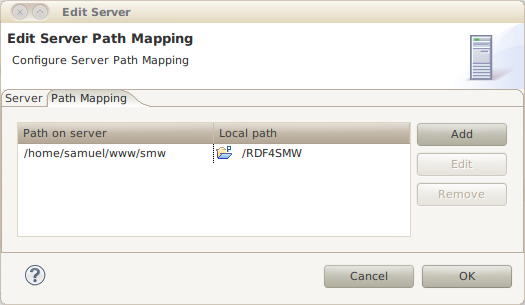

Custom location of www folder

Then, an important thing: if you have changed the location of your www folder from /var/www, don't miss this! This is what caused my breakpoints not to be hit:

- Click the "Configure" button in the Debug configuration screen seen above

- Click the "Path mapping" tab

- Configure according to the location of your www folder. My current config looks like so:

- This should be it. Happy debugging!
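Why does the path mapping matter? Conceptually, the debugger reports the server-side path of each executing script, and the IDE has to translate it into a file in the workspace before it can match breakpoints. The Python sketch below only models that idea (it is not how Eclipse PDT is implemented), and the paths, including the extensions/RDFIO file location, are hypothetical.

```python
from typing import Dict, Optional


def map_server_path(server_path: str, mappings: Dict[str, str]) -> Optional[str]:
    """Translate a server-side file path into a local workspace path.

    `mappings` maps server-side folder prefixes to local folders; the longest
    matching prefix wins. Returns None if no mapping applies, which in this
    model means no breakpoint can be matched to the file.
    """
    candidates = []
    for server_prefix, local_prefix in mappings.items():
        prefix = server_prefix.rstrip("/") + "/"
        if server_path.startswith(prefix):
            candidates.append((prefix, local_prefix.rstrip("/") + "/"))
    if not candidates:
        return None
    prefix, local = max(candidates, key=lambda pair: len(pair[0]))
    return local + server_path[len(prefix):]


# Hypothetical setup: www folder moved to /home/user/www.
script = "/home/user/www/smw/extensions/RDFIO/RDFImporter.php"
print(map_server_path(script, {"/home/user/www/smw": "/home/user/workspace/RDF4SMW"}))
# /home/user/workspace/RDF4SMW/extensions/RDFIO/RDFImporter.php
print(map_server_path(script, {"/var/www/smw": "/home/user/workspace/RDF4SMW"}))
# None
```

The second call mirrors the problem described above: a mapping that still points at /var/www never matches the moved files.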

Considering name change: SMW RDF Connector --> SMW RDF IO

Fri, 2010-07-09 20:41 | by Samuel Lampa

Most probably, the extended RDF import/export for Semantic MediaWiki that I'm working on will be made a separate extension, at least to start with (that's my idea, anyway).

At first I was calling it "RDFIO", but fearing that the name was too general or not descriptive enough, I switched to "SMW RDF Connector", and the project currently lives in a repo called SMW RDF Connector.

Now, realizing that this is awfully long, I'm thinking of going back to "RDFIO", or rather "SMW RDF IO", before it's "too late" (I haven't even created a MediaWiki extension page yet). It seems like the cleverest, shortest choice after all.

Any comments?

New release of Bioclipse (2.4)

Fri, 2010-07-09 18:46 | by Samuel Lampa

Bioclipse, the open source chemoinformatics and bioinformatics workbench that was the context for my thesis project, has just released a new major version, 2.4.

Also, the site got a major overhaul just a few days ago, so why not have a look? :)

Final version of degree project report

Mon, 2010-07-05 21:01 | by Samuel Lampa

The last administrative details of my thesis project are now finished, and the final version of the report is available for download as a PDF on this page (no. 14 in the list), or via this direct link. (The title of the project was "SWI-Prolog as a Semantic Web Tool for semantic querying in Bioclipse: Integration and performance benchmarking".)

RDF properties to use for wiki titles on import - suggestions?

Wed, 2010-06-30 11:20 | by Samuel Lampa

One of the things we are trying to do in my GSoC project is to select suitable wiki titles when importing arbitrary RDF triples into Semantic MediaWiki (full RDF URIs are very ugly as wiki titles!).

Simply shortening the namespace in the entity's URI to its prefix (as specified in the import data) could be a general fallback (see screenshot), but for many types of data it would be nice to use a property that gives a "natural language" label for the entity instead. We have come up with this list of properties so far:

- http://semantic-mediawiki.org/swivt/1.0#page

- http://purl.org/dc/elements/1.1/title

- http://www.w3.org/2004/02/skos/core#prefLabel

- http://xmlns.com/foaf/0.1/name

More suggestions?

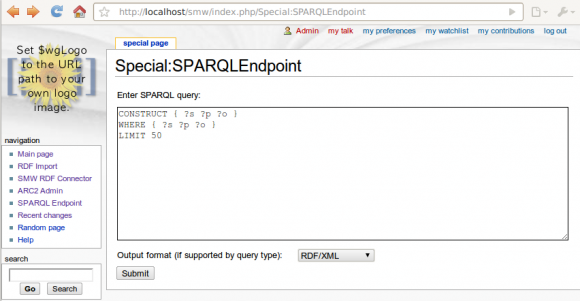

ARC2-based SPARQL Endpoint for Semantic MediaWiki up and running

Tue, 2010-06-29 22:25 | by Samuel Lampa

I have now managed to create a SPARQL endpoint for Semantic MediaWiki, as a MediaWiki SpecialPage, based on the ARC2 RDF library for PHP (including its built-in triplestore). See the screenshot below, and the code in the SVN trunk. (I have to say I'm impressed by how easy ARC2 is to work with!)
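For trying an endpoint like this from a script, a client mainly needs to send the query as a URL parameter. The Python sketch below builds such a GET URL under two assumptions: that the special page accepts the query in a `query` parameter as in the SPARQL Protocol, and that it lives at the hypothetical address shown (the actual page name may differ). The resulting URL could then be fetched with `urllib.request.urlopen`.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def sparql_query_url(endpoint: str, query: str) -> str:
    """Build a GET URL passing `query` as the SPARQL Protocol "query" parameter."""
    separator = "&" if "?" in endpoint else "?"
    return endpoint + separator + urlencode({"query": query})


# Hypothetical endpoint location for a local wiki installed under /smw.
endpoint = "http://localhost/smw/index.php?title=Special:SPARQLEndpoint"
query = "SELECT ?s ?p ?o WHERE { ?s ?p ?o } LIMIT 10"
url = sparql_query_url(endpoint, query)

# Round-trip check: the query survives URL encoding intact.
print(parse_qs(urlparse(url).query)["query"][0] == query)  # True
```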

The code is still quite ugly, and the "Equivalent URI" handling we talked about is not implemented yet. I'll turn to that now, while doing some refactoring (making it more object-oriented, etc.).